How RFAS Works

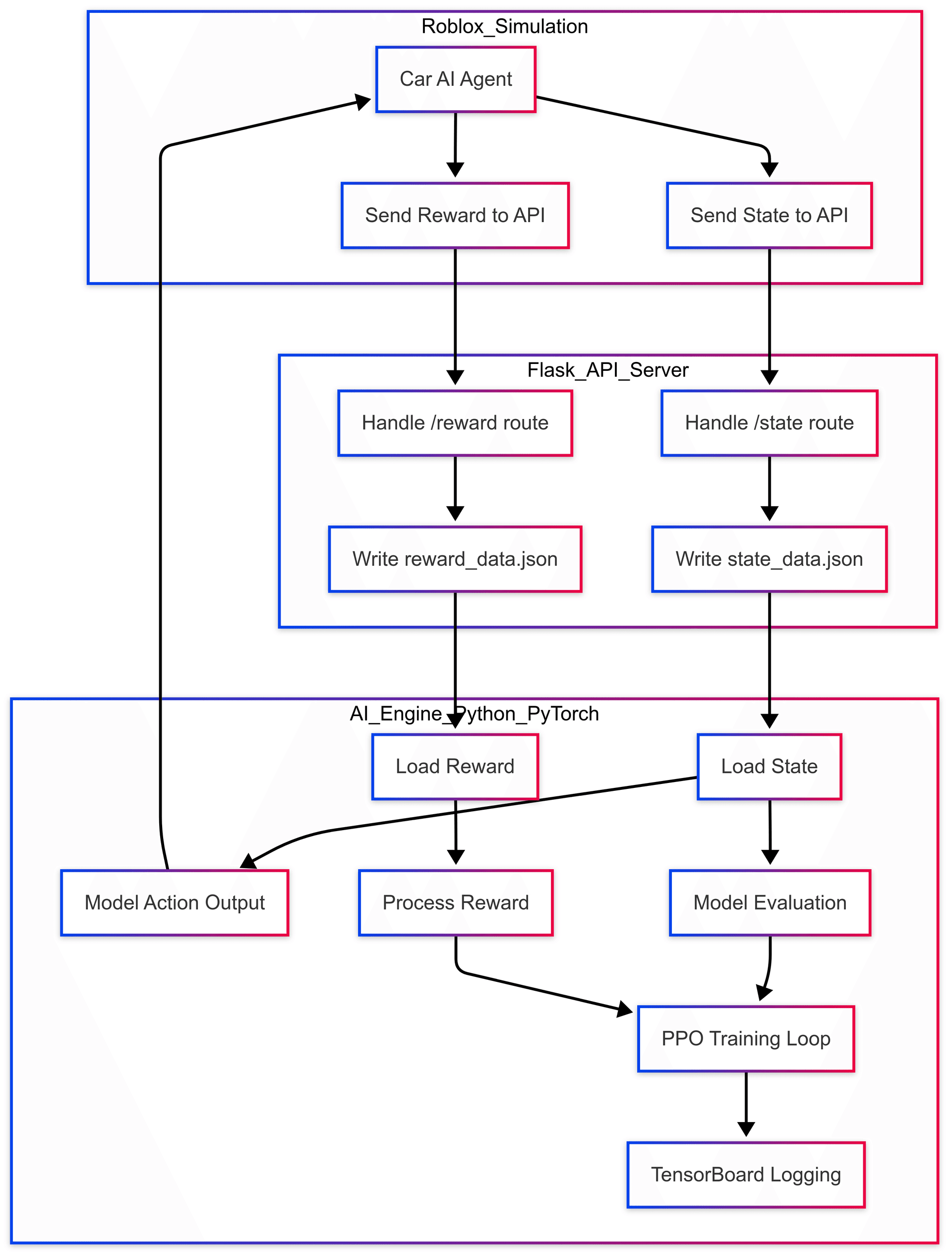

System Architecture

RFAS uses a client-server architecture in which the Roblox game (client) communicates with the AI model (server) over HTTP. Here's how the components work together:

- Roblox Client: Handles the game simulation, physics, and rendering. It sends state information to the API and receives control commands.

- Flask API: Acts as the bridge between Roblox and the AI model. It receives HTTP requests from Roblox, processes them, and forwards them to the model.

- PyTorch Model: The neural network trained using reinforcement learning that makes driving decisions based on the current state of the car.

- Training Module: Implements the PPO algorithm for updating the model based on rewards and experiences.

Data Flow

- 1

State Collection

The Roblox game collects the current state of the racing car, including:

- Current speed

- Position and orientation

- Distance to track edges

- Current throttle and steering values
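On the Python side, these fields have to be turned into a fixed-order numeric vector before a model can consume them. The following is a minimal sketch, assuming only the five field names that appear in the example payload in the next step (the real payload may carry more). The helper name `state_to_features`, the field order, and the `MAX_SPEED` constant are illustrative assumptions, not part of RFAS.

```python
from typing import List

# Assumed field order; it must match the order the model was trained with.
FEATURE_KEYS = (
    "speed",
    "distance_left",
    "distance_right",
    "current_throttle",
    "current_steer",
)

MAX_SPEED = 100.0  # hypothetical normalization constant


def state_to_features(state: dict) -> List[float]:
    """Flatten a state dict into a fixed-order list of floats."""
    missing = [key for key in FEATURE_KEYS if key not in state]
    if missing:
        raise ValueError(f"missing state fields: {missing}")
    features = [float(state[key]) for key in FEATURE_KEYS]
    features[0] /= MAX_SPEED  # scale speed so it is comparable to the other inputs
    return features
```

Rejecting incomplete states early keeps a malformed request from silently shifting every feature by one position.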

- 2

API Communication

This state data is sent as a JSON payload to the Flask API endpoint via HTTP POST request.

    POST /api/predict
    {
      "speed": 45.2,
      "distance_left": 3.5,
      "distance_right": 2.1,
      "current_throttle": 0.8,
      "current_steer": -0.2
      ...
    }

- 3

AI Processing

The PyTorch model processes the state data and determines the optimal control actions. The model outputs:

- Throttle value (0 to 1)

- Steering value (-1 to 1)
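The network shown in the implementation steps ends in a plain linear layer, so its raw outputs are unbounded. One common way to map them into these ranges is a sigmoid for throttle and a tanh for steering. This is an assumption about how the mapping could be done, not something RFAS is confirmed to do, and `to_controls` is a hypothetical helper name.

```python
import math


def to_controls(raw_throttle: float, raw_steer: float) -> dict:
    """Squash unbounded network outputs into valid control ranges."""
    # sigmoid(x) written as 0.5 * (1 + tanh(x / 2)) to avoid overflow for large |x|
    throttle = 0.5 * (1.0 + math.tanh(raw_throttle / 2.0))  # in [0, 1]
    steer = math.tanh(raw_steer)                            # in [-1, 1]
    return {"throttle": throttle, "steer": steer}
```

Clamping with `min`/`max` would also work, but smooth squashing functions keep gradients non-zero near the limits during training.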

- 4

Response & Action

The API returns the control values to the Roblox game, which applies them to the car.

    { "throttle": 0.75, "steer": 0.3 }

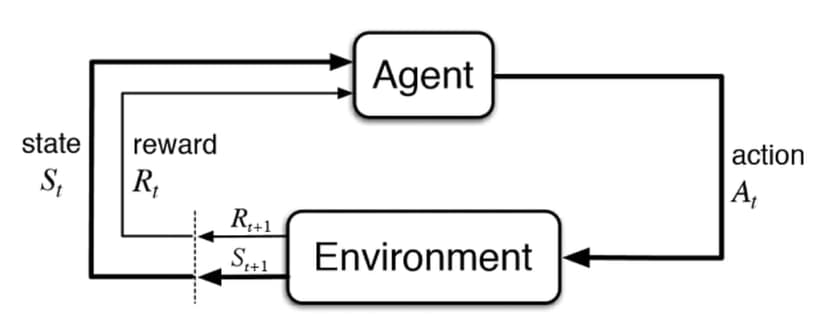

Reinforcement Learning

RFAS uses Proximal Policy Optimization (PPO), a widely used policy-gradient reinforcement learning algorithm. The training process works as follows:

- The AI agent (car) interacts with the environment (racetrack) by taking actions.

- The environment provides rewards based on performance (staying on track, maintaining speed, etc.).

- The PPO algorithm updates the policy to maximize these rewards over time.

- This process repeats, allowing the AI to improve its driving skills progressively.
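The reward signal in the second step could be shaped along the following lines. This is a sketch only: the source names staying on track and maintaining speed as reward criteria but gives no formula, so the function name `compute_reward`, the centering term, the weights, and the `max_speed` default are all illustrative assumptions.

```python
def compute_reward(speed: float, distance_left: float, distance_right: float,
                   off_track: bool, max_speed: float = 100.0) -> float:
    """Combine a speed term and a track-centering term into one scalar reward."""
    if off_track:
        return -1.0  # flat penalty for leaving the track

    # Fraction of the assumed top speed, limited to [0, 1]
    speed_term = min(max(speed / max_speed, 0.0), 1.0)

    # 1.0 when centered between the edges, 0.0 when touching one of them
    width = distance_left + distance_right
    if width > 0:
        centering = 1.0 - abs(distance_left - distance_right) / width
    else:
        centering = 0.0

    # Hypothetical weights: favor speed, but still reward staying centered
    return 0.7 * speed_term + 0.3 * centering
```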

Advantages of PPO

- Stable training process

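- Sample-efficient relative to simpler policy-gradient methods, because each batch of experience is reused for several update epochs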

- Continuous action space support

- Balance between exploration and exploitation
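Continuous actions and exploration are commonly handled together by treating the network output as the mean of a Gaussian and sampling the action around it. The sketch below assumes a fixed standard deviation (implementations often learn it instead). The function names are hypothetical, and the source does not state which action distribution RFAS uses.

```python
import math
import random


def gaussian_log_prob(action: float, mean: float, std: float) -> float:
    """Log-density of `action` under a normal distribution N(mean, std^2)."""
    z = (action - mean) / std
    return -0.5 * z * z - math.log(std) - 0.5 * math.log(2.0 * math.pi)


def sample_action(mean: float, std: float, rng: random.Random):
    """Sample an exploratory action and return it with its log-probability."""
    action = rng.gauss(mean, std)
    return action, gaussian_log_prob(action, mean, std)
```

A larger `std` produces more exploration. The stored log-probability is what PPO later compares against the updated policy.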

Implementation Steps

- 1

Set Up the Python Environment

Install PyTorch, Flask, and other required libraries.

    pip install torch flask numpy

- 2

Create the AI Model

Build a neural network model using PyTorch that takes state information and outputs control commands.

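    import torch.nn as nn


    class RacingNetwork(nn.Module):
        """Maps a state vector to raw control outputs."""

        def __init__(self, input_dim, output_dim):
            super().__init__()
            # Define network layers: two hidden layers with ReLU activations
            self.layers = nn.Sequential(
                nn.Linear(input_dim, 128),
                nn.ReLU(),
                nn.Linear(128, 64),
                nn.ReLU(),
                nn.Linear(64, output_dim),
            )

        def forward(self, x):
            # The last layer has no activation, so outputs are unbounded
            return self.layers(x)

- 3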

Set Up the Flask API

Create a Flask server that handles requests from the Roblox game and returns predictions.

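    import torch
    from flask import Flask, request, jsonify

    app = Flask(__name__)

    # `model` and `process_input` are assumed to be defined elsewhere:
    # `model` is a loaded RacingNetwork, and `process_input` converts the
    # JSON state into a 1-D input tensor.

    @app.route('/api/predict', methods=['POST'])
    def predict():
        data = request.json
        # Process input data and make a prediction without tracking gradients
        with torch.no_grad():
            result = model(process_input(data))
        return jsonify({
            'throttle': float(result[0]),
            'steer': float(result[1])
        })

- 4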

Implement PPO Training

Create the reinforcement learning loop that collects experiences and updates the model.
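Two pieces of arithmetic sit at the core of such a loop: turning collected rewards into discounted returns, and evaluating PPO's clipped surrogate objective. The sketch below uses plain Python floats to show the calculation. In actual training the same expressions would be written with PyTorch tensors so that gradients flow back to the policy. The function names are hypothetical, and `gamma=0.99` and `clip_eps=0.2` are commonly used defaults rather than values taken from RFAS.

```python
import math


def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns, running = [], 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def clipped_surrogate(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Mean PPO clipped objective (to be maximized) over a batch."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the more pessimistic of the clipped and unclipped terms
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

Advantages are typically the returns minus a learned value baseline. Clipping limits how much a single update can benefit from moving the policy away from the one that collected the data.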

- 5

Configure Roblox to Connect with the API

Add scripts to your Roblox game that send state data and receive control commands.

Integration with Roblox

The final step is integrating RFAS with your Roblox racing game. This requires:

Roblox-side Implementation

- Scripts to gather car state data

- HttpService calls to communicate with the Flask API

- Functions to apply received control commands

- Logic to handle latency and connection issues

Model Deployment

- Running the Flask server

- Loading pre-trained models

- Model versioning and updates

- Performance monitoring and optimization